The Map Processing Report gives you better insight into how your map was processed by DroneDeploy. The report highlights key information, points out flight discrepancies, and surfaces factors that may need to be adjusted or addressed in order to make the best possible map.

Access the processing report by visiting the Map Details section of your project.

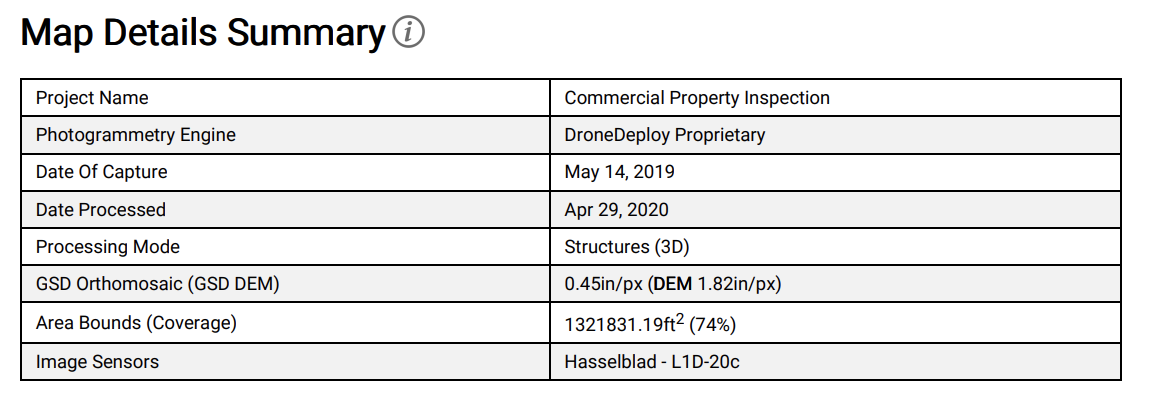

Map Details Summary

The map details summary will give an overview of some of the key details of the map at the time of processing.

| Row Name | Definition |
|---|---|
| Project Name | Name given to the project at the time of processing. |
| Photogrammetry Engine | The engine used to process the map. DroneDeploy uses our own proprietary algorithm so this should be the default engine. |
| Date of Capture | Date flown. The date is derived from the EXIF data reported by the drone at the time of flight. |
| Date Processed | Date the images were processed (or reprocessed) in DroneDeploy. |
| GSD Orthomosaic | Ground sample distance of the orthomosaic. A minimum-resolution threshold is applied when computing GSD: when a drone flies very close to the ground, the minimum resolution is used and the map is generated at that value. |
| Area Bounds (Coverage) | Area of the processed map and percent coverage of the selected region of interest. |
| Image Sensors | Camera used for the flight. |
| Average GPS Trust | Average regional trust for GPS, in meters. |
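As a rough illustration of the GSD row, the sketch below uses the standard photogrammetric relation between sensor width, focal length, flight altitude, and image width for a nadir image. It is not DroneDeploy's implementation: the function name, the example camera values, and the `min_gsd_cm` floor are assumptions, since the report does not state the actual threshold that is applied.

```python
def estimate_gsd_cm(sensor_width_mm, focal_length_mm, altitude_m,
                    image_width_px, min_gsd_cm=0.0):
    """Approximate ground sample distance (cm/pixel) for a nadir image.

    min_gsd_cm is a hypothetical floor standing in for the minimum-resolution
    threshold mentioned in the table; the real value is not documented here.
    """
    if focal_length_mm <= 0 or image_width_px <= 0:
        raise ValueError("focal length and image width must be positive")
    # Ground width covered by one image (m), converted to cm, divided by pixels.
    gsd_cm = (sensor_width_mm * altitude_m * 100.0) / (focal_length_mm * image_width_px)
    # Never report a finer resolution than the configured floor.
    return max(gsd_cm, min_gsd_cm)


# Example values (assumed): 13.2 mm sensor, 8.8 mm lens, 5472 px wide images.
high_flight = estimate_gsd_cm(13.2, 8.8, 60.0, 5472)
low_flight = estimate_gsd_cm(13.2, 8.8, 5.0, 5472, min_gsd_cm=0.5)
```

With a floor configured, a very low flight reports the floor value instead of the finer computed resolution, which mirrors the behavior the table describes.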

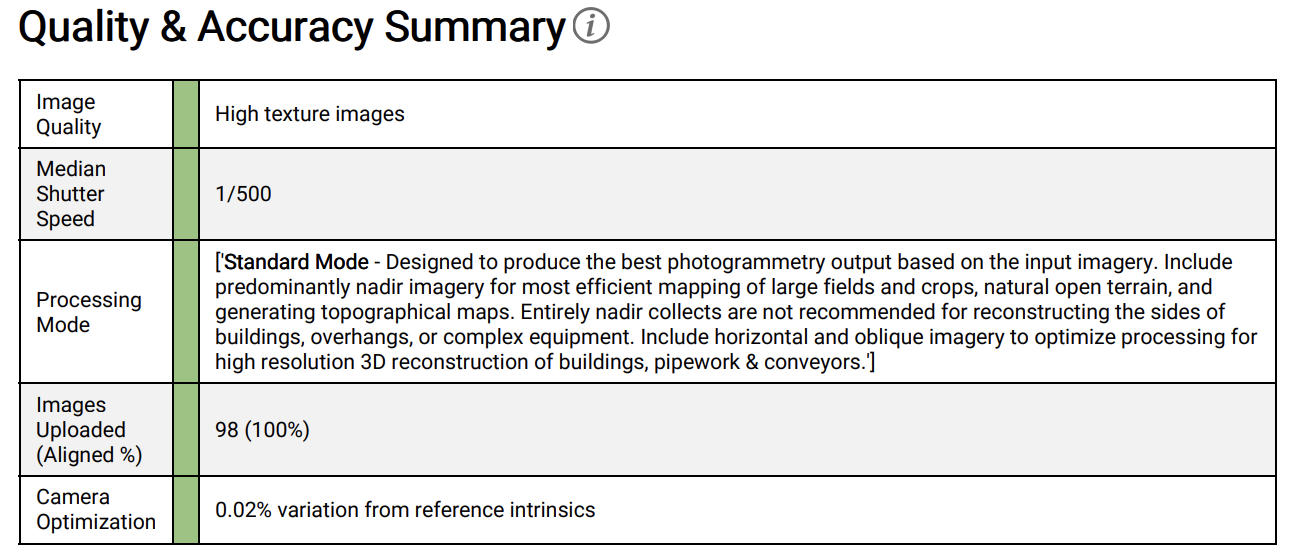

Quality & Accuracy Summary

The quality and accuracy summary will provide insight into possible problem areas in the processing of the map. Expected values will be marked in green, and possible problem areas will be marked in orange.

Example of the Quality & Accuracy Summary Section.

| Row | Definition |

|---|---|

| Image Quality | Represents the image quality for stitching. A high texture image will have crisp, detailed features that are easily recognizable. A low texture image would be blurry, over-exposed, or smooth. Thermal images are often low texture. |

| Median Shutter Speed | Shutter speed for the camera. <1/80 is likely to produce motion blur and could result in incorrect scale or measurements. |

| Images Upload (Aligned %) | This is the number of images that provided the correct data needed to be used in processing. Unaligned images could be lacking EXIF data, or not provide the proper overlap for the subject in the image. |

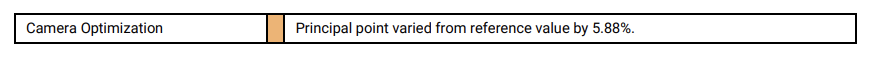

| Camera Optimization |

Principle Point: The initial values provided to us from the EXIF data may be adjusted in photogrammetry if they deviate from the expected values. A high variation from principle point can be raised to support@dronedeploy.com Focal Length: The focal length is estimated during the photogrammetry process. If it varies more than 5% for the reference value, that could indicate a problem with the lens or camera. The use of zoom during mapping, or inclusion of zoomed images from the same camera can cause the focal length to be calculated incorrectly. |

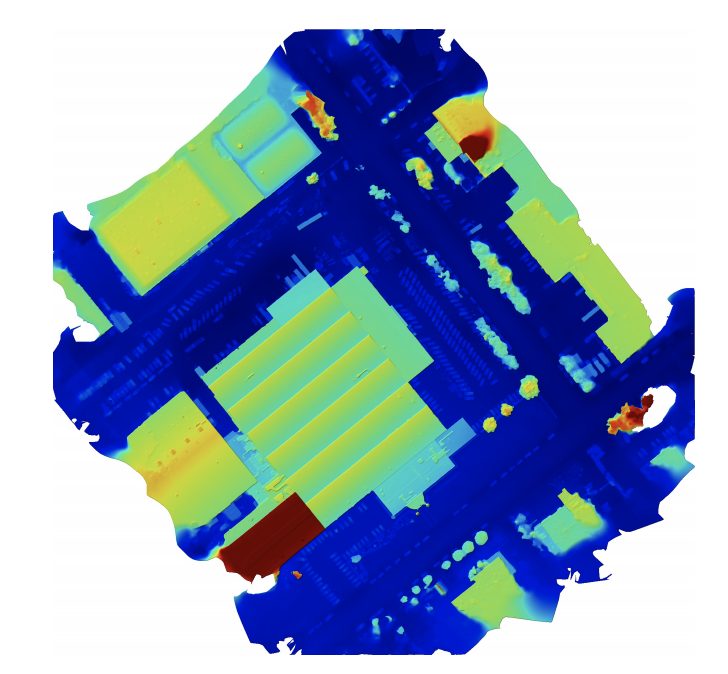

Preview

This section provides a preview of the processed orthomosaic as well as the elevation profile of the processed map.

Processed Orthomosaic

Elevation Profile

Dataset Quality Review

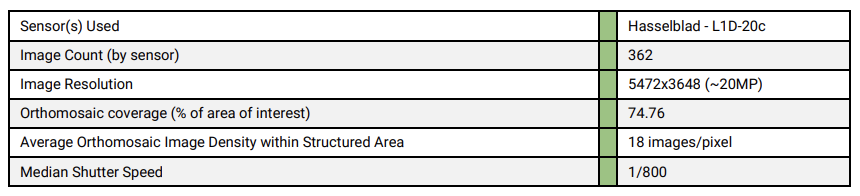

Input GPS Uncertainty: This plot shows the GPS uncertainty reported for each input image and only appears on RTK or PPK maps. It represents the "error" reported by the source image, that is, the image's positional accuracy before processing. Large ellipses in this plot could indicate poor or low RTK/PPK connectivity during flight, which can ultimately lead to inaccuracies in your map.

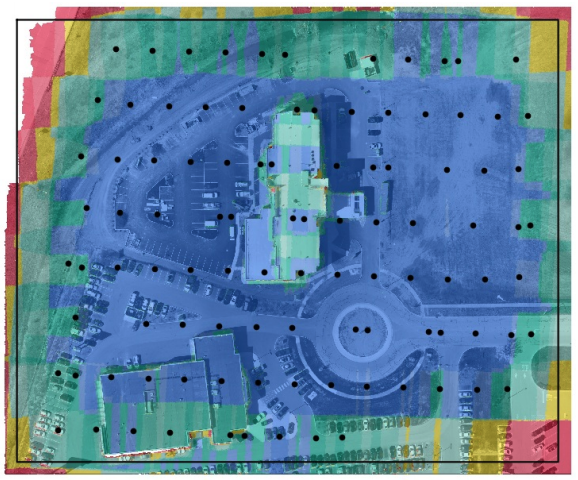

Orthomosaic Coverage

This plot provides some of the most important information about the processing potential of your map. In this section, you will see black dots representing each of the images collected for the site. You may also see yellow squares representing GPS-aligned images. A yellow square means the image could not be tied into the rest of the scene using the standard algorithm, so the metadata in EXIF was used to place the image.

Photogrammetry works by matching key points across multiple photos in order to understand the relative position of all the cameras. Because of this, capturing several high-quality images of every point on every surface is key to producing a good map and 3D model. This is achieved through "overlap", or how much each image overlaps in content with its neighboring images.

This can also be referred to as image density. For the best possible DroneDeploy processing, we recommend an image density of 8-9 images per pixel. (This roughly equates to 75/70 overlap.)

For this map, we would recommend increasing the overlap of the flight, or using our enhanced 3D mode to achieve better overlap on the rooftop of the building. This map can also be cropped to remove any poor stitching from the edges, which we would expect in the red and yellow areas.

ROI: Region of Interest

Aligned: Images that were successfully geolocated and aligned.

A great map is expected to be primarily blue. Red areas indicate places that need a) a higher altitude and/or b) more overlap. Note: For best results, we recommend flying with the DroneDeploy flight app.

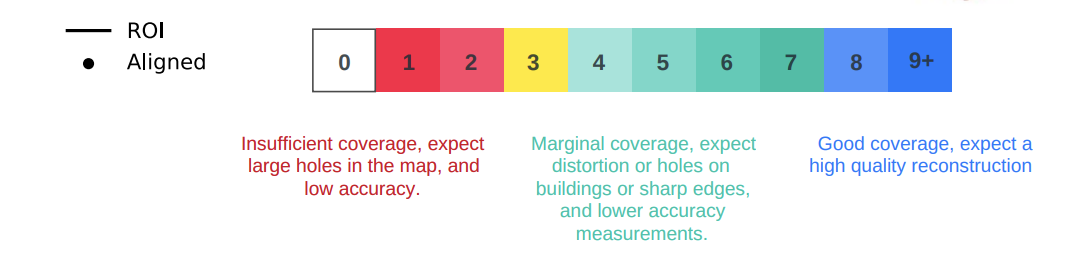

Another important table within the dataset quality review shows information such as the sensor used during the mission, image count and resolution, and percent coverage.

| Row | Definition |
|---|---|
| Sensor(s) Used | Camera and sensor used for the flight. |
| Image Count (by sensor) | Images taken per sensor used during the mission. |
| Image Resolution | Average resolution of all images in the uploaded image set. |
| Orthomosaic coverage (% of area of interest) | Average coverage of the region of interest. This takes into account images/pixel. |
| Average Orthomosaic Image Density within Structured Area | Another measure of coverage of the region of interest, taking into account individual image density. |
| Median Shutter Speed | Median shutter speed of the camera. Speeds slower than 1/80 s are likely to produce motion blur and could result in incorrect scale or measurements. |
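The shutter speed check in the table can be reproduced on your own image set before uploading. The sketch below is a minimal example, assuming the exposure times have already been read from EXIF as strings such as `"1/120"`; the function names and the sample values are hypothetical, and only the 1/80 s threshold comes from the table.

```python
from fractions import Fraction
from statistics import median

# Exposure times longer (slower) than this are flagged, per the table above.
BLUR_THRESHOLD_S = Fraction(1, 80)


def median_exposure(exposures):
    """Return the median exposure time in seconds as an exact Fraction."""
    return median(Fraction(e) for e in exposures)


def is_blur_risk(exposure_time_s):
    """True when the exposure is slower than 1/80 s."""
    return exposure_time_s > BLUR_THRESHOLD_S


# Hypothetical EXIF exposure values for two flights.
slow_flight = median_exposure(["1/60", "1/100", "1/50"])
fast_flight = median_exposure(["1/200", "1/120", "1/100"])
```

Using `Fraction` keeps values like 1/80 exact, so the comparison against the threshold does not depend on floating-point rounding.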

Pairs Connectivity

If map processing fails before the Orthomosaic Coverage plot could be generated, the Pairs Connectivity plot, if available, is shown instead. In this plot, each dot represents one of the images collected for the site and each line joins two images that are connected with each other. It uses the same color code as the Orthomosaic Coverage plot.

The Solved Camera Locations plot, if available, will be shown in conjunction with Pairs Connectivity to assist diagnosis. It displays the location and altitude of each image and whether the image was successfully structured.

Structure from Motion

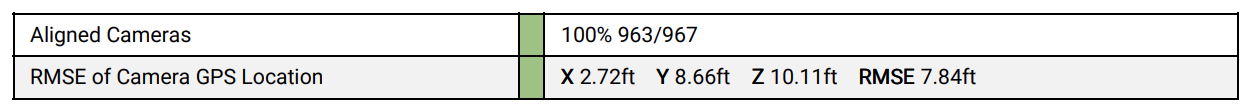

This diagram shows the GPS alignment versus the actual alignment of images taken during the mission. You are looking for a smaller error in this diagram for the best results. This may look similar to the GPS Uncertainty plot, but this plot represents post-processing results.

Aligned Cameras: For each image taken, DroneDeploy uses image content to place the camera in 3D space. Additionally, the metadata of the images is used to geolocate each image and thus the final map. 100% alignment means all of the uploaded images were used in the reconstruction of the map. Unaligned cameras (red X's on the Quality Review set) indicate camera locations that could not be located in 3D and were therefore not used in the reconstruction.

Unaligned images often do not provide the visual data needed to successfully stitch (e.g. water, homogeneous data, moving trees, insufficient overlap to match other images).

RMSE or Root Mean Squared Error: The camera location XYZ root mean squared error (RMSE) is a measure of the spread of the error of the solved camera XYZ versus the location specified by the GPS value recorded in the images. Therefore, as an example, a 10ft (3m) Camera Location XYZ RMSE means that in general, the solved XYZ image location should be within 10ft of the supplied GPS location.

Note: Camera location error does not correspond to the true accuracy of a map. For example, poor GPS conditions can cause large camera location errors, but if images are properly collected, the processed map will still be highly accurate when you measure distances and volumes in a small part of the map. To truly measure map accuracy, you must include checkpoints or an object with known dimensions that can be measured in the processed map to check for differences.
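To make the RMSE definition concrete, here is a minimal sketch of how a camera location XYZ RMSE could be computed from two lists of positions. It assumes both sets of coordinates are already in the same metric coordinate system and uses the 3D Euclidean distance per camera; the report does not spell out the exact formula DroneDeploy uses, so treat the function and sample data as illustrative.

```python
import math


def camera_location_rmse(solved_xyz, gps_xyz):
    """Root mean squared 3D distance between solved and GPS camera positions."""
    if not solved_xyz or len(solved_xyz) != len(gps_xyz):
        raise ValueError("need two non-empty position lists of equal length")
    # Squared Euclidean distance for each camera.
    squared_errors = [
        sum((s - g) ** 2 for s, g in zip(solved, gps))
        for solved, gps in zip(solved_xyz, gps_xyz)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical positions in meters: one camera is off by 3 m, the other by 4 m.
solved = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
gps = [(3.0, 0.0, 0.0), (10.0, 4.0, 0.0)]
rmse_m = camera_location_rmse(solved, gps)
```

Because the errors are squared before averaging, a few badly placed cameras raise the RMSE more than they would raise a simple average of the distances.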

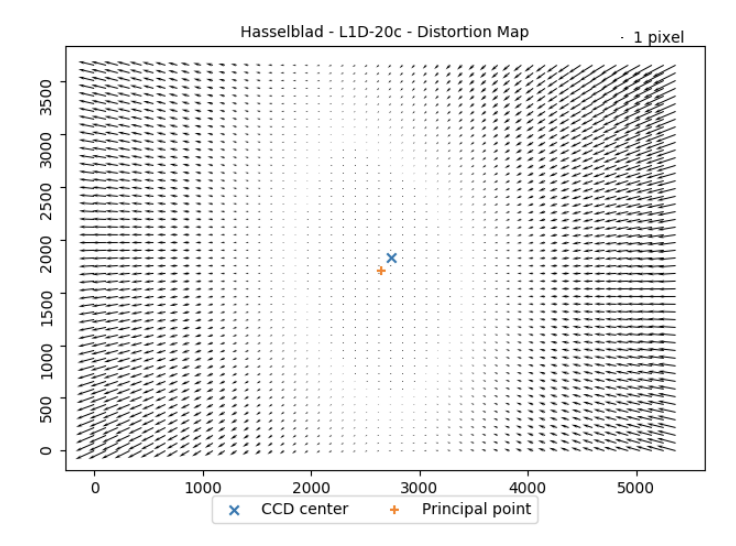

Camera Calibration

In an ideal world, the camera calibration diagram would be symmetrical, and the blue and red crosshairs would be close to each other or overlapping.

In the above diagram, it would be ideal for the red and blue crosshairs to be directly on top of each other.

When the camera calibration is off by more than 5%, a warning is triggered.

Principal Point: The initial values provided to us from the EXIF data may be adjusted in photogrammetry if they deviate from the expected values. A high variation from the principal point can be raised to support@dronedeploy.com.

Focal Length: The focal length is estimated during the photogrammetry process. If it varies more than 5% from the reference value, that could indicate a problem with the lens or camera. The use of zoom during mapping, or inclusion of zoomed images from the same camera, can cause the focal length to be calculated incorrectly.
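The 5% rule for focal length can be expressed as a simple relative-deviation check. The sketch below is an illustration only: the function names and the pixel values are assumptions, and it does not reproduce how DroneDeploy derives the reference value.

```python
def focal_length_deviation(estimated, reference):
    """Relative deviation of the estimated focal length from the reference."""
    if reference <= 0:
        raise ValueError("reference focal length must be positive")
    return abs(estimated - reference) / reference


def exceeds_calibration_threshold(estimated, reference, threshold=0.05):
    """True when the deviation is larger than the threshold (5% by default)."""
    return focal_length_deviation(estimated, reference) > threshold


# Hypothetical focal lengths in pixels.
within_tolerance = exceeds_calibration_threshold(3700.0, 3648.0)
needs_review = exceeds_calibration_threshold(3900.0, 3648.0)
```

The same check works for any unit (pixels or millimeters) as long as the estimated and reference values use the same one.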

Densification and Meshing

Additional key information is found within the densification and meshing table. Expected values will be marked in green, and possible problem areas will be marked in orange.

| Row | Definition |
|---|---|
| Processing Mode Quality | Quality setting that was selected for processing the map: Turbo or Quality. |
| Nadir Images | Percentage of nadir imagery within the image set. |
| Oblique Images | Percentage of oblique imagery within the image set. |
| Horizontal Images | Percentage of horizontal imagery within the image set. Horizontal images can cause issues with reconstruction if they contain views of the horizon or sky. |
| Total Points | The number of points in the point cloud. An indicator of the fidelity of the point cloud. |
| Point Cloud Density | Total Points divided by the area of covered pixels in the orthomosaic. It is an approximation of the total density. |
| Mesh Triangles | The number of faces in the mesh. An indicator of the fidelity of the mesh. |
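The Point Cloud Density row is a straightforward ratio, sketched below. The table does not state the units used in the report, so this example assumes the covered area is derived from a count of covered orthomosaic pixels and a GSD in meters, giving points per square meter; the function name and sample numbers are hypothetical.

```python
def point_cloud_density(total_points, covered_pixels, gsd_m):
    """Approximate point density (points per square meter).

    The covered area is estimated as covered_pixels * gsd_m ** 2, which is
    an assumption about how the area of covered pixels is measured.
    """
    area_m2 = covered_pixels * gsd_m ** 2
    if area_m2 <= 0:
        raise ValueError("covered area must be positive")
    return total_points / area_m2


# Hypothetical map: 12 million points over 40 million pixels at 2 cm GSD.
density = point_cloud_density(12_000_000, 40_000_000, 0.02)
```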

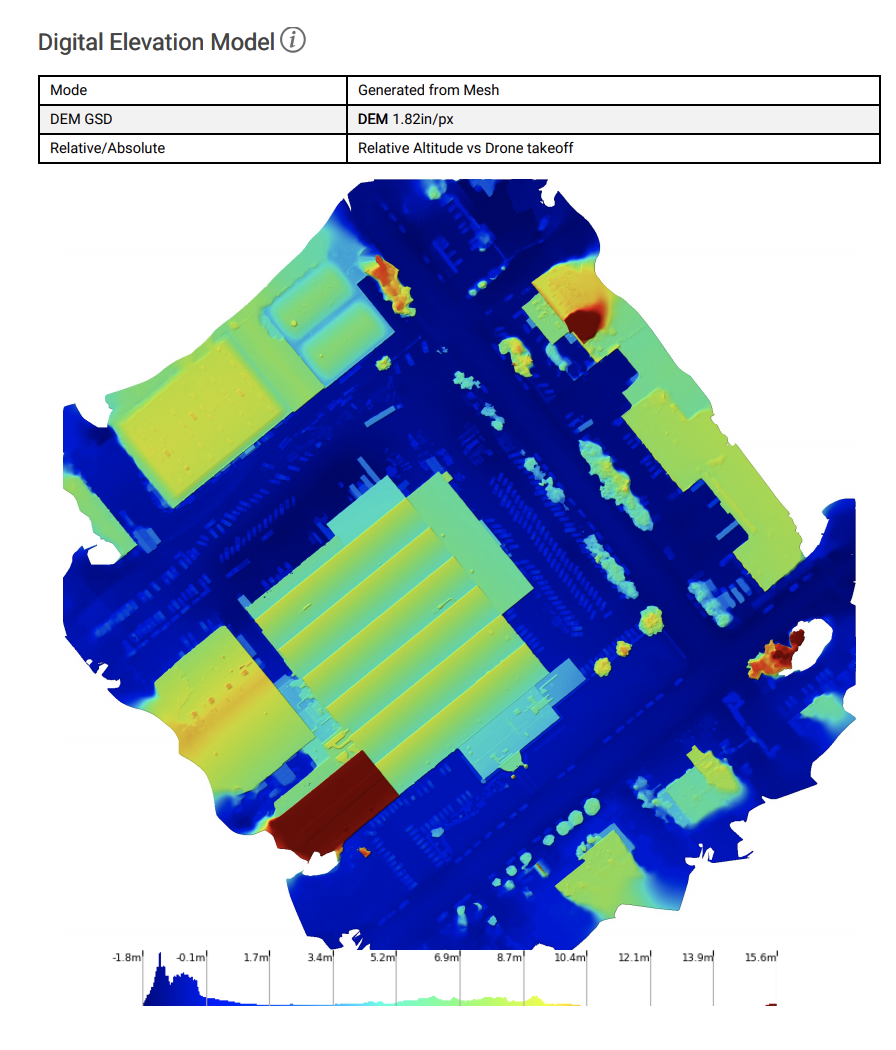

Digital Elevation Model (DEM)

This section of the Map Processing Report focuses on the digital elevation model and provides useful insight into how the elevation model was created and what data was used to stitch and calculate the elevation.

An example of the Digital Elevation Model section of the Map Processing Report.

| Row | Definition |
|---|---|
| Mode | The mode used to process the map and build the DEM. DroneDeploy uses our own algorithm, "Generated from Mesh", so this should be the default mode. |
| DEM GSD | GSD stands for "ground sample distance". This is the size of each pixel in the DEM. |
| Relative/Absolute | Indicates what the elevations are calculated relative to. Most of the time it will be the absolute altitude. |

GCPs and Checkpoints

If you have included GCPs on your map, they will also be outlined in the Map Processing Report.

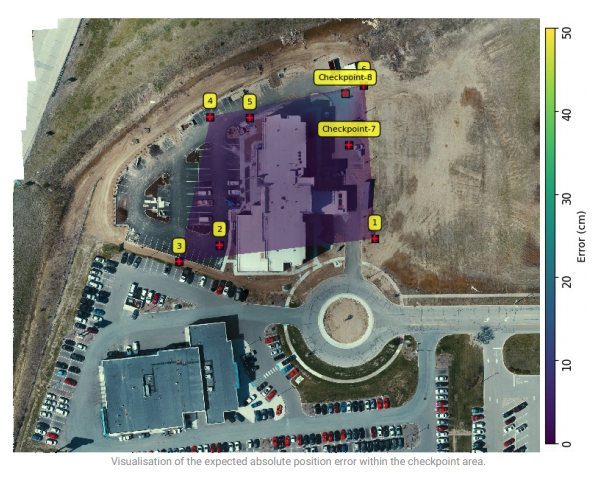

The controlled area is shaded, and if you are using checkpoints, we highlight the measured error and predict how it may manifest across the map.

GCP Alignment Method:

- Transform - < 3 GCPs: We can only safely move the map in XYZ.

- Similarity Transform - High trust GPS input data (RTK/PPK) with GCPs: Each photo is like its own "control point". As GCPs are often in a different datum to the drone data, we conduct a 3D transformation to minimize the distance to the GCPs without warping the map (only translation, rotation, and scale). This process optimizes for robust accuracy across the entire map, for example between GCPs and at Checkpoint locations, but can result in a slight increase in the RMSE of the average GCP.

- Similarity Transform & Bundle Adjustment - Low trust GPS input data (Standard GPS) with GCPs: Move the cameras capturing the scene to align with the GCP datum roughly, then use GCPs in the photogrammetry process to adjust the camera parameters and constrain the camera locations.
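To illustrate what "only translation, rotation, and scale" means in the Similarity Transform method, the sketch below applies a similarity transform to a pair of points and shows that distances change by the scale factor alone, so the map is not warped. It only applies a transform that is already known; estimating the transform from GCPs is not shown, and the function names, the rotation about the vertical axis, and the sample values are all assumptions made for the example.

```python
import math


def rotation_z(angle_rad):
    """3x3 rotation matrix about the vertical (Z) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def apply_similarity(points, scale, rotation, translation):
    """Apply p' = scale * R @ p + t to each XYZ point."""
    transformed = []
    for p in points:
        rotated = [sum(rotation[i][j] * p[j] for j in range(3)) for i in range(3)]
        transformed.append(
            tuple(scale * rotated[i] + translation[i] for i in range(3))
        )
    return transformed


# Two hypothetical map points 5 m apart.
points = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)]
moved = apply_similarity(points, scale=1.01, rotation=rotation_z(0.1),
                         translation=(2.0, -1.0, 0.5))

distance_before = math.dist(points[0], points[1])
distance_after = math.dist(moved[0], moved[1])
```

Every distance in the map is multiplied by the same scale factor, which is why this method keeps relative measurements consistent across the whole map.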

Map Alignment

DroneDeploy maps that do not use GCPs but are captured at the same location are automatically aligned with maps from previous days. This section (if it appears) describes the map before and after alignment, along with the required transformation.

Find out more here: Automatic Map Alignment